2024 DIMACS REU Projects

DIMACS is very proud for the achievements of the 2024 DIMACS REU students during their summer research experience. This page includes the abstracts of the final papers that the students submitted to the program, summarizing their summer research.

Todor Antic (Charles University (Prague, Czech Republic)) & Guillermo Gamboa (Charles University (Prague, Czech Republic)) & Jelena Glisic (Charles University (Prague, Czech Republic)) & Patrik Zavoral (Charles University (Prague, Czech Republic))

On Approximating Diameter with Outliers

Mentor: Karthik Srikanta, Computer Science

Abstract: We study the furthest pair with outliers problem, where the goal is to identify a given number of outlier points such that the diameter of the remaining pointset is minimized. This is closely related to various clustering problems such as the Max-k-diameter with outliers. We show that the furthest pair with outliers is NP-complete by a reduction from the independent set. Further, we share found limits of (in)approximability for the furthest pair with outliers for the usual ell-p metrics. We report that 4 + ε approximation is efficiently computable and that no PTAS exists for the problem.

Ben Bencik (Charles University (Prague, Czech Republic))

Testing a start in a graph

Mentor: Sumegha Garg, Computer Science

Abstract: Property testing is a notion of approximation for decision problems, where given a property, the task is to distinguish whether a given instance has this property or is "far" from any instance having the property. A key complexity measure for property testing algorithms is its query complexity, which is the maximum number of input elements queried to determine whether the graph satisfies a given property. While there are established bounds for the query complexity of various graph properties, there are hardly any results on the impact of memory constraints. This project investigates property testing on graphs, studying how memory constraints affect the complexity of property testing in both deterministic models and scenarios involving random queries.

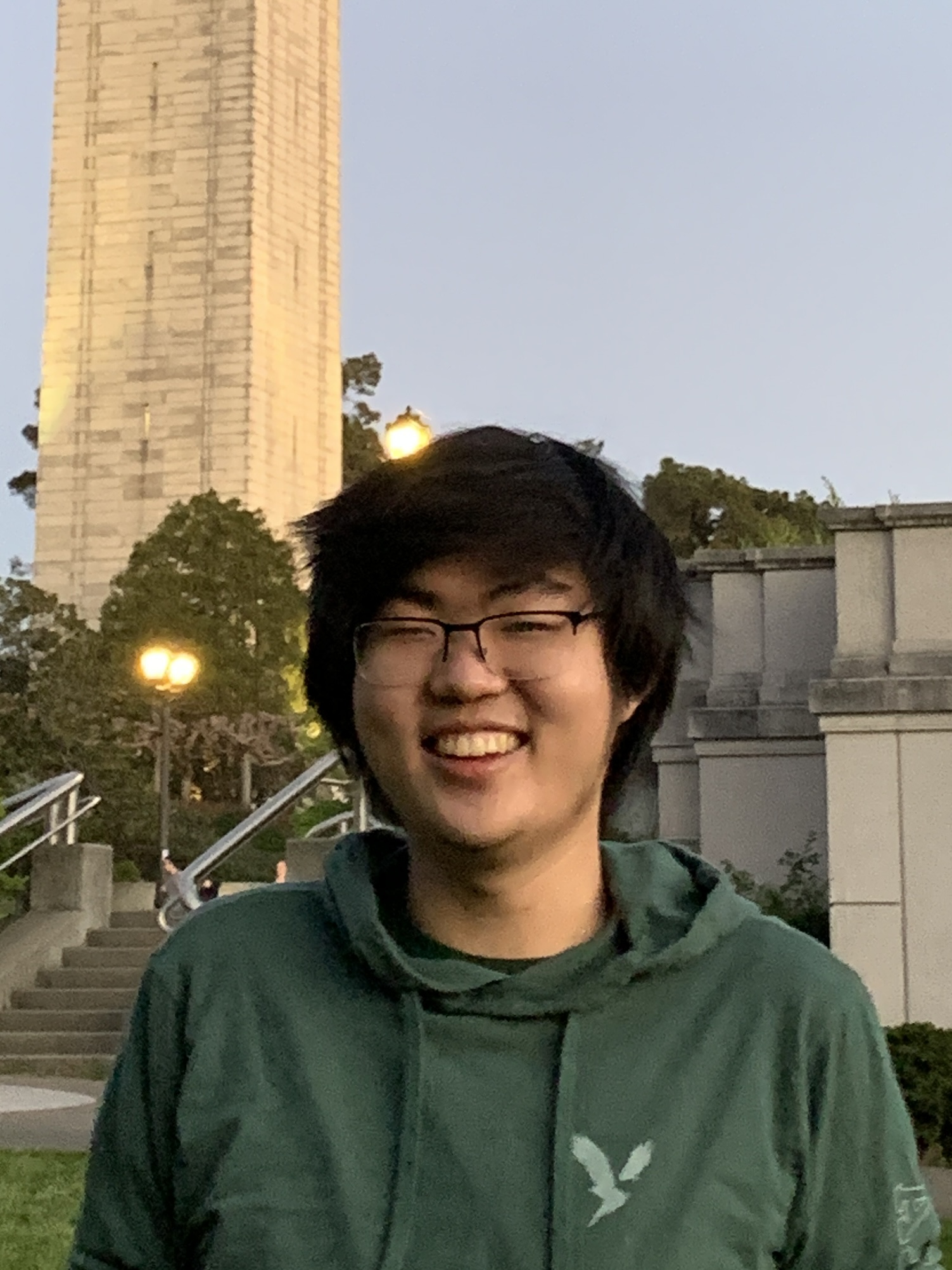

Thomas Chen (University of California-Berkeley CA)

Evaluating Differentially Private Synthetic Data Generators in Social Science Settings

Mentor: Ruobin Gong, Statistics

Abstract: As we continue to use large public datasets for studies and models, there are increasing concerns over the risk of sharing public data. Many studies have shown that potential malevolent agents can cross-reference public datasets to find out sensitive information about individuals in public datasets. One such theoretical remedy that has been suggested is to use differentially private synthetic datasets in place of the original dataset. However, no studies so far have evaluated these synthetic data generators on actual real-life studies and measured how effective they are at replicating results. In this work, we look at the current differentially private synthetic data generators and evaluate them on recent social science studies that use large public datasets. We focus on the synthetic data generator called DataSynthesizer and analyze how faithful it is in replicating results from the study using the Panel Study of Income Dynamics. We find that Datasynthesizer struggles with complex queries that consider more than just marginal distributions. Additionally, we examine how the quality of the synthetic data varies across different settings to give future guidance on how to provide quality differentially private synthetic datasets.

Katarina Cheng (Massachusetts Institute of Technology MA)

Toward Lower Bounds on Memory-Sample Tradeoffs for Learning Parity with Noise

Mentor: Sumegha Garg, Computer Science

Abstract: Proving the amount of storage necessary to learn under memory-constraints has important applications to machine learning and bounded-storage cryptography. In this project, we study memory-sample tradeoffs on the learning parity with noise problem (LPN) in the streaming model. The longterm goal is to prove a lower bound on memory-sample tradeoffs for LPN of Omega(n^2/eps^2) or an exponential number of samples. n parameterizes the size of the LPN secret, while epsilon parameterizes the sample noise. In this report, we describe intermediate observations, obstacles, and results toward proving the tradeoff within our modified branching program model, similar to prior work. Finally, we provide a conjecture and some intuition toward a weaker tradeoff, which instead tolerates a polynomial number of samples.

Ryan Ding (University of California-San Diego CA)

Protein-DNA Binding Site Prediction with Sequence-Dependent Force Field Energy Minimization

Mentor: Wilma Olson, Chemistry and Chemical Biology

Abstract: The conformational preferences of B-DNA sequences hold significant research value, particularly in the context of gene expression and DNA replication processes. Equally important are the interactions between protein structures that bind to these sequences and impact the conformational preferences of the complex. However, identifying the ideal binding site to which the protein should be bound on a sequence can be challenging, as differing locations may lead to less favorable conformations that impact DNA processes. We present a method that utilizes sequence-dependent force field models derived from high-resolution structures to predict the ideal binding site for a protein structure on a DNA sequence. We enhance existing energy minimization protocols within the emDNA software and conduct a case study using the DNA gyrase enzyme and a 601 base pair DNA minicircle. Through iteratively energy minimization procedure across a sequence with varying protein binding sites, we are able to calculate and derive low-energy states of the protein-DNA complex which may reveal ideal binding locations and thus make educated inferences that may assist in solving crystal structures.

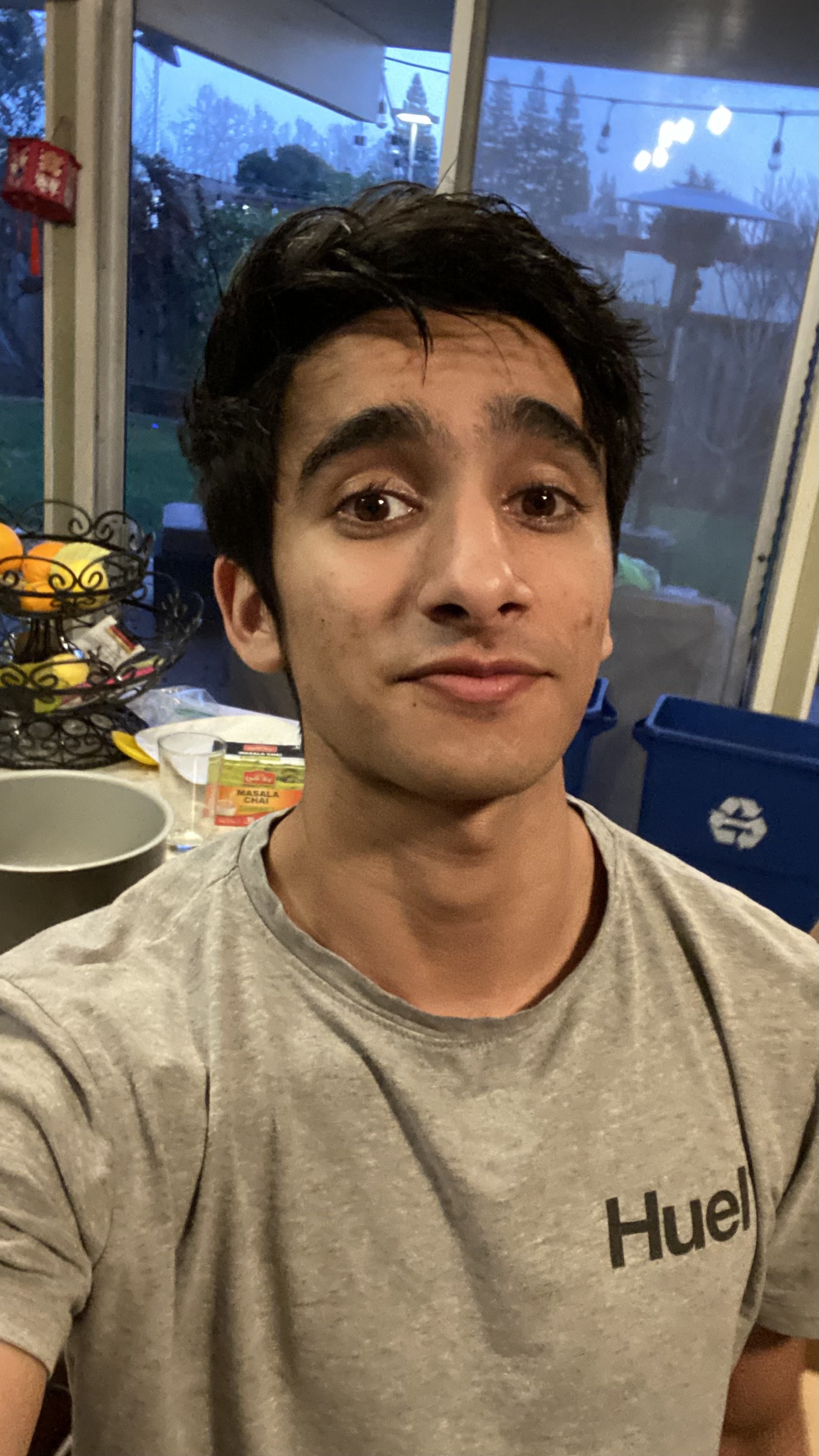

Abbas Dohadwala (Purdue University-Main Campus IN) & Ish Shah (Rutgers University-New Brunswick NJ)

When Fourier analysis meets ergodic theory and number theory

Mentor: Mariusz Mirek, Mathematics

Abstract: Ergodic theory is an area of mathematics which studies the statistical properties of dynamical systems. The Birkhoff ergodic theorem is a classical result of ergodic theory, which establishes pointwise convergence almost everywhere for a specific average under certain conditions. In this project, we worked towards proving an ergodic theorem with some similarities, but involving fractional powers of primes. With techniques from analytic number theory and Fourier analysis, we studied exponential sums involving these fractional powers of primes to obtain certain bounds. Using these bounds, we use harmonic analysis tools to prove a sequence of theorems, eventually leading to our desired result.

Adam Dzavoronok (Charles University (Prague, Czech Republic)) & Tymofii Reizin (Charles University (Prague, Czech Republic)) & Jakub Sosovicka (Charles University (Prague, Czech Republic))

Venn diagrams and related structures

Mentor: Bhargav Narayanan, Mathematics

Abstract: A hypergraph ℋ on {1 ... n} is just a collection of subsets of {1 ... n}. We say that there is a "k-Venn diagram" in ℋ if there are k sets ( A1, ... , Ak ) in ℋ such that all 2k intersections ( B1 ∩ ... ∩ Bk ) are non-empty where each Bi can either be Ai or the complement of Ai (equivalently, if we draw the Venn diagram associated with (A1, ... , Ak), all the regions of this picture are nonempty). Our goal is to try to understand the following question: how many sets can our hypergraph ℋ have if it fails to contain a k-Venn diagram?

Eli Friedman (Dartmouth College NH)

Densest Subgraph with Strong and Weak Signals

Mentor: Shahrzad Haddadan, Management Sciences and Information Systems

Abstract: We consider the problem of identifying the densest subgraph, one with many applications, in a novel computational setting in which we have access to only a noisy graph signal and limited queries to a strong oracle. Our algorithms solve this problem when the density of the densest subgraph is asymptotically larger than the noise of the weak signal. When the noise in the weak signal is small and the maximum density is Ω(log n), we show an algorithm that achieves a constant approximation guarantee. Alternatively, when the noise is large, we are only able to design an algorithm whose additive approximation guarantee deteriorates with the magnitude of noise. We conjecture that no algorithm can be designed to circumvent this difficulty without excessive use of the strong oracle.

Mahdi Hamad (Harvard University MA)

Cut/Flow Structures in Graphs with Terminals

Mentor: Zihan Tan, DIMACS

Abstract: This research extends the work of Chaudhuri et al. on terminal cut functions in multiterminal networks by developing a system of necessary inequalities for networks with six terminals. We delve into the mathematical foundations of flow networks, thoroughly review the contributions for the cases with three, four, and five terminals, and generalize these findings to six terminals. Additionally, we integrate insights from recent advancements by Zihan Tan and explore the implications of submodularity and laminar families in this context.

Robert Jaworski (Charles University (Prague, Czech Republic))

Understanding a platform's strategic entry into the marketplace

Mentor: Xintong Wang, Computer Science

Abstract: Recent years have witnessed the rise of online marketplaces (e.g., Amazon, App Store) that create great convenience to facilitate matchings and improve economic efficiency. While these platforms use third-party sellers data to facilitate better matchings, they have also leveraged those sales data to inform the decisions of entering the marketplace with their own products. We analyze historical data of Amazon products provided by Keepa.com in order to understand Amazon's entry strategy and analyze the impact on third party sellers.

Jasmine Khalil (Pennsylvania State University-Main Campus PA)

Coupling from the Past for Statistical Mechanics Models

Mentor: Pierre Bellec, Statistics

Abstract: Coupling from the past is a variation of traditional Markov Chain Monte Carlo methods and is used to create a perfect simulation of a model. Many MCMC algorithms sample from a close approximation of the stationary distribution. As a result, we end up with undesired convergence issues because we want to sample from the exact distribution. CFTP solves this issue and produces perfect samples from the desired stationary distribution. In this paper, we show how Coupling from the Past differs from ordinary MCMC and optimize it for a perfect simulation of a statistical mechanics model, the Ising model of ferromagnetic materials. We anticipate that our work may be a starting point for the simulation of a broader range of more sophisticated models with real-world applications relating to phase transition phenomena using this perfect sampling.

Justin Kim (Rutgers University-New Brunswick NJ)

Secure and Efficient Digital Signatures

Mentor: Periklis Papakonstantinou, Management Science and Information Systems

Abstract: Essentially all of cryptography requires the use of unproven assumptions, and much work is dedicated to relaxing these assumptions as much as possible. Digital signatures were thought to require public-key cryptographic primitives until 1989, when Naor and Yung proposed the class of Universal One-Way Hash Function families, which can be constructed from injective one-way functions, a private-key primitive. This assumption was later relaxed again to arbitrary one-way functions. Naor and Yung showed these hash functions are sufficient to construct efficient cryptographically secure digital signature schemes, however, in their original paper, many details and proofs are omitted for this claim. Most of this project is dedicated to analyzing and replicating Naor and Yung's result with full exposition, and we worked towards improving their scheme in different settings.

Tymur Kotkov (Charles University (Prague, Czech Republic))

Evaluating the Cooperative Potential of LLMs

Mentor: Xintong Wang, Computer Science

Abstract: Large Language Models (LLMs) are increasingly pivotal in enhancing human-AI interactions, yet their behavior in competitive environments remains underexplored. This study investigates whether LLMs can develop cooperative strategies in the Prisoner's Dilemma, knowing only the game rules and receiving no external decision-making support. We simulate an Axelrod's Tournament with a limited set of predefined strategies and one LLM agent, assessing its behavior based on successful strategy characteristics and tournament outcomes. Our findings reveal that the LLM adapts its decision-making process to each opponent, demonstrating an ability to balance cooperation and competition effectively. This adaptability suggests significant potential for deploying LLMs in complex, dynamic environments such as economics, diplomacy, and social governance. While further validation in real-world scenarios is necessary, these results provide a promising foundation for understanding how LLMs make choices within different environments and contexts.

Sofiia Kotsiubynska (Charles University (Prague, Czech Republic)) & Volodymyr Kuznietsov (Charles University (Prague, Czech Republic))

k-Stanley conjecture

Mentor: Swee Hong Chan, Mathematics

Abstract: In this project we studied properties of linear extensions of a poset and worked on proving/disproving conjectures related to them.

Julia Krizanova (Charles University (Prague, Czech Republic))

Dynamics in Truth Learning

Mentor: Jie Gao, Computer Science

Abstract: Consider a network N consisting of n agents, where all of them with ability to learn, want to correctly determine the value, i.e. state of the world they live in. Knowledge of each of the agents consists of his own private information and of actions that the other agents in the agent's neighborhood made before him. The actions of agents are being made in a sequential setting following an ordering σ. The goal is to achieve so called asymptotic truth learning on the whole network, meaning that almost all agents make a correct prediction of the current state of the world. To illustrate, when the number of agents n → ∞, then the probability of predicting the correct ground truth approaches one. In this paper we investigate and dive further into the potential relation of the truth learning and the field of statistical physics, and aim to perceive the problem from the dynamics point of view.

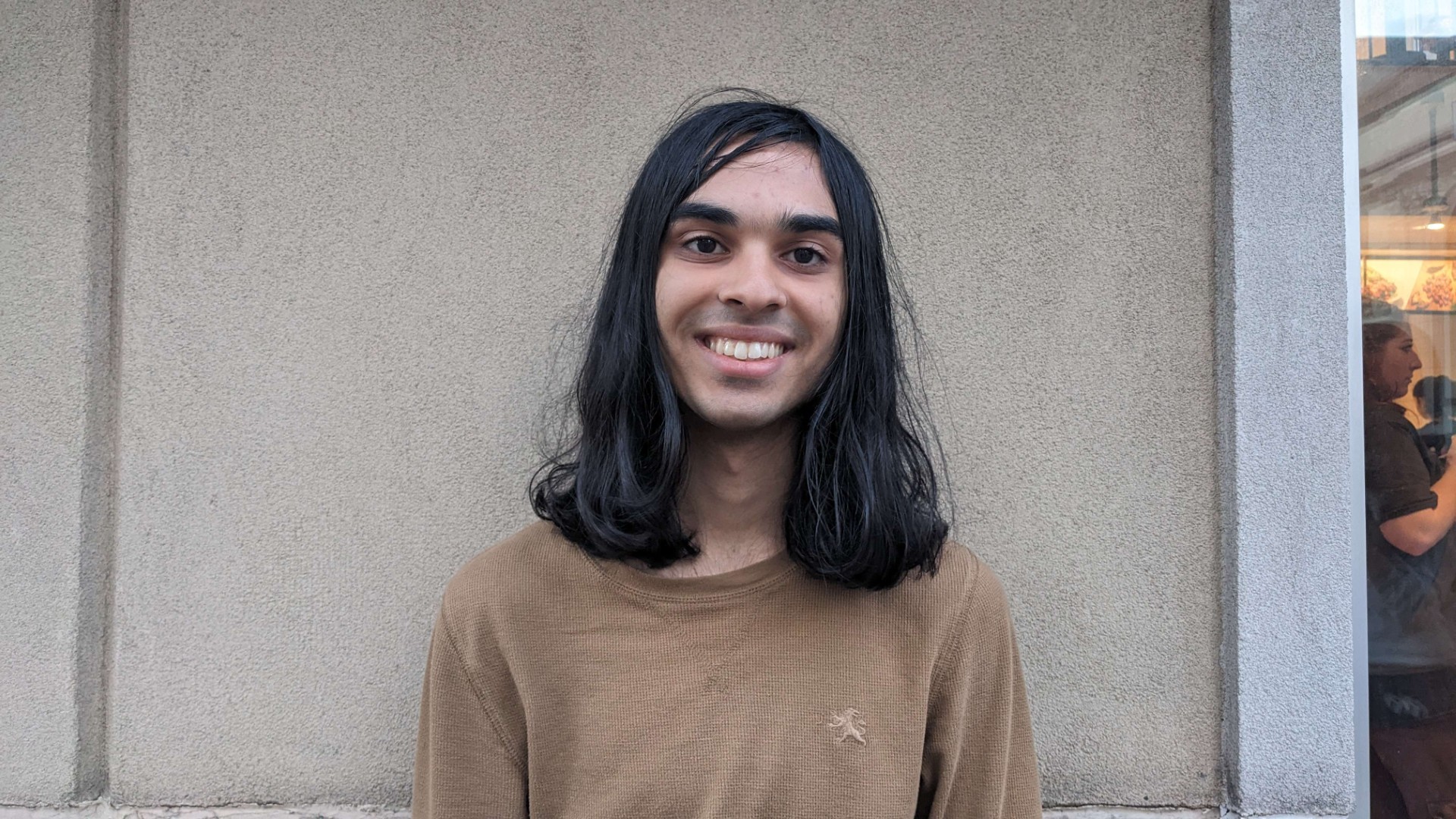

Edwin Lu (Brown University RI) & Shiv Yajnik (Columbia University in the City of New York NY)

Enumerating curves on surfaces

Mentor: Feng Luo, Mathematics

Abstract: We are interested in counting isotopy classes of curves on compact, topological, orientable surfaces of the form Σg,n, where g is the genus of the surface and n is the number of punctures. We are interested in surfaces which can be assigned a hyperbolic structure; namely, this requires the Euler characteristic χ(Σg,n) = 2-2g-n to be negative. In particular, we are not interested in spheres with at most 2 punctures or the genus 1 torus, as (1) those are not hyperbolic surfaces, and (2) those only contain boundary-parallel or nullhomotopic curves, which are not very interesting.

Starting in the late 2000s, M. Mirzakhani produced some major results concerning geodesic counting on hyperbolic surfaces. More recently, methods have been developed so that computations may not rely on hyperbolic geometry but rather a strictly topological and combinatorial point of view, even though the underlying structures may be hyperbolic. The notion of length that we use--called combinatorial length--is based on the number of intersections with special kinds of triangulations called ideal triangulations. Our goal is to find the number of curves on a given surface under a certain combinatorial length. A known and important fact is that isotopy classes of simple closed curves have a bijective correspondence with closed hyperbolic geodesics. Furthermore, while our definition of ideal triangulations is strictly topological, there is a construction of ideal triangulations in the language of hyperbolic geometry. One result of this is that combinatorial length is proportional to the geodesic length with respect to a hyperbolic metric. Therefore, our results will serve as estimations for geodesic counting problems.

Rhett Olson (University of Minnesota-Twin Cities MN)

The Effects of Adversarial Agents on Social Networks

Mentor: Jie Gao, Computer Science

Abstract: To make better decisions, agents often try to aggregate information from others. This phenomenon has been studied extensively through the lens of social networks. However, in real-world settings, one cannot always assume that others intend to make the best decision, or share their information honestly. Such agents act as adversaries for the task of social learning. This research investigates whether social networks can be used to aggregate information in a way that is robust against such adversaries. We present results on the robustness of two specific social networks: the Celebrity network and the Butterfly network. These results improve our understanding of how information can be aggregated effectively in social networks, both in networks with high-degree nodes and networks where all nodes have a constant degree.

Nigel Seymour (University of Maryland-Baltimore County MD)

Privately Estimating visualization using correlation

Mentor: Anand Sarwate, Electrical and Computer Engineering

Abstract: Researchers are interested in using data to demonstrate the results of health and human behavior of individuals. In sharing data, researchers have begun to shift to utilizing more data visualization techniques, and while these charts and graphs are visually more aesthetically pleasing, it is important to not violate the privacy of individuals who have reported their information. These data points must be protected using different techniques. While many focus on both qualitative support and quantitative results, the focus on the outputs must use algorithms to ensure that the data privacy remains secure and protected. I experimented using a linear correlation formula to understand how correlation works with different pieces of data from the US Census. Our goal for this project is to create differentially private correlation estimates.

Dmitriy Shvydkoy (University of Illinois at Urbana-Champaign IL)

Triple Product L-function

Mentor: Michael Woodbury, Mathematics

Abstract: Triple product L-functions are of great interest in number theory and quantum physics. Trilinear forms have deep connections with these L-functions, and these forms are the main objects of study in this work. This project explored the relation between two separate trilinear forms of the group GL(2, K), for some finite field K. We first used Sage to gather a large amount of experimental computations of these trilinear forms for small values of q = |K|. Then using the computational results as insight, we proved these results for general q.

Shreya Sinha (Princeton University NJ)

Mathematical Modeling of Wrinkles in Thinly Curved Sheets

Mentor: Ian Tobasco, Mathematics

Abstract: What rules dictate the wrinkle-patterns in general, simply-connected curved sheets? This project aimed to expand on results in the case of simply-connected sheets to non-simply connected sheets. The overall goal was to find a relation between the upper and lower convex envelopes of admissible convex functions (ones that satisfied certain requirements in order to successfully model wrinkle patterns), which we achieved and wish to further understand and simplify. We found concrete functions that solved the optimization problem in the case of an annulus, and found leads into the cases of a shifted annulus (where the hole is not centered on the disk) as well as have some idea as to how to solve the case of a disk with concentric regions of differently-signed curvature.

Filip Uradnik (Charles University (Prague, Czech Republic)) & Amanda Wang (Princeton University NJ)

NP-hardness of Finding Optimal Learning Rate in a Sequential Social Network

Mentor: Jie Gao, Computer Science

Abstract: Sequential learning models situations where agents predict a ground truth in sequence, having access to their private, noisy measurements, and the predictions of agents who came earlier in the sequence. We study a generalization of this model to networks, where agents only see a subset of the previous agents' actions - those in their own neighborhood. We consider a setting where agents' rationality is bounded, only basing their decisions on a simple majority rule. The fraction of agents who predict the ground truth correctly depends heavily on the ordering in which the predictions are made. An important question in this area is whether there exists an ordering, under which the agents predict the ground truth correctly with high probability. In our project, we show that it is in fact NP-hard to answer this question for a general network for agents with bounded rationality.

Ruth Velasquez (Florida International University FL)

Genomic Data-Guided Computational Modeling of Cancer

Mentor: Subhajyoti De, Rutgers Cancer Institute of New Jersey

Abstract: Cells in the body are continuously multiplying and dying, a process that releases nucleosomal free-floating DNA into the bloodstream. This DNA, originating from various tissues, offers valuable insights into the state and progression of diseases, particularly cancer. By analyzing this circulating DNA, researchers can pinpoint the origin of the DNA and track the evolution of tumors. Our project focuses on leveraging computational algorithms and advanced mathematical techniques to detect and interpret patterns in this free-floating DNA from blood tests. These patterns can provide critical information about the patient's condition, including the emergence of drug resistance. Specifically, we aim to develop a method that not only identifies the presence of drug resistance but also constructs a timeline of its development. Early detection of drug resistance is crucial for timely intervention and effective treatment planning. Through this project, we hope to refine our understanding of the dynamics of drug resistance and improve the precision of personalized medicine. By combining computational tools with biological data, our goal is to enhance the early detection and management of cancer, ultimately contributing to better patient outcomes.

Jinghan Zeng (University of Illinois at Urbana-Champaign IL)

Clock auctions as optimal independent sets on graphs

Mentor: Daniel Schoepflin, DIMACS

Abstract: This project focused on clock auctions, which are auctions where the price is gradually raised according to a clock and the item is awarded to the last bidder who stays in the auction. We studied how clock auctions perform on graphs, where the agents are the vertices, and our goal is to serve an item to an independent set on a graph with the aim of maximizing the total welfare. The three types of graphs we focused on were the K1,2, star graph, and serving a matching on a graph with weighted edges rather than weighted vertices. For the K1,2 case, we gave a randomized auction with an approximation ratio close to the current best upper bound. We also lowered the upper bound on the approximation ratio of a randomized auction over a star graph. Finally, we gave a deterministic auction for the matching case, which improves the current best auction in the literature.

Return to REU Home Page

Return to REU Home PageDIMACS Home Page

DIMACS Contact Information

Page last modified on August 22, 2024.